A Comparison of Supervised Learning Algorithms

Contributed by Amy (Yujing) Ma. She is currently in the NYC Data Science Academy 12-week full-time Data Science Bootcamp program, which runs from January 11th to April 1st, 2016. This post is based on her class notes and previous machine learning research. She posted this research in the 8th week of the program.

Which supervised learning algorithm is the best? People just starting their machine learning journey inevitably ask this question.

To answer this question, we used 4 data sets (one for a regression problem and the other 3 for binary classification problems) to test 6 supervised learning algorithms: SVMs (linear and kernel), neural networks, logistic regression, gradient boosting, random forests, and linear ridge regression. We also applied model averaging to improve ridge regression and logistic regression.

Table 1. Description of Data Sets

| Type | Data set | # Predictor attributes | Train size | Test size | Class distribution |
|---|---|---|---|---|---|
| Regression | Boston Housing | 13 | 253 | 253 | - |
| Binary classification | Wdbc | 30 | 300 | 269 | Malignant: 212, Benign: 357 |
| Binary classification | Hypothyroid (has missing values) | 34 | 1956 | 1134 | Hypothyroid: 149, Negative: 2941 |
| Binary classification | Ionosphere (first 2 predictors deleted) | 34 → 32 | 220 | 131 | Good: 225, Bad: 126 |

For some of the methods, Boston Housing is trained twice: once with the original attributes and once with scaled data. In addition, to test logistic regression, Boston Housing was converted to a binary problem by labeling observations whose “MEDV” is larger than its median as positive and the rest as negative.
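The two Boston Housing variants can be sketched in R as follows. This is a minimal sketch that assumes the copy of the data in the `MASS` package (column names are lower-case, e.g. `medv`) and computes the median on all 506 rows; the post's own 253/253 split and preprocessing code are not shown, so details may differ.

```r
library(MASS)  # provides the Boston Housing data as the data frame `Boston`

predictors <- setdiff(names(Boston), "medv")

# Variant 1: standardize the 13 predictors (mean 0, sd 1); the response stays as-is
boston_scaled <- Boston
boston_scaled[predictors] <- scale(Boston[predictors])

# Variant 2: binary response for logistic regression:
# 1 if MEDV is above its median, 0 otherwise
boston_bin <- Boston
boston_bin$y <- as.integer(Boston$medv > median(Boston$medv))
boston_bin$medv <- NULL

table(boston_bin$y)
```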

Data Cleaning

Hypothyroid has many missing values, and 18 of its 34 predictors are binary. The number of missing values and the number of unique values for each variable are shown below (training data only):

[code lang="r"]
# hyp: the Hypothyroid training data
# Number of missing values in each column
sapply(hyp, function(x) sum(is.na(x)))
##    V1   V2   V3   V4   V5   V6   V7   V8   V9  V10  V11  V12  V13
##     0  277   44    0    0    0    0    0    0    0    0    0    0
##   V14  V15  V16  V17  V18  V19  V20  V21  V22  V23  V24  V25  V26
##     0    0  278    0  429    0  152    0  151    0  150    0 1840

# Number of unique values in each column (NA counts as a value)
sapply(hyp, function(x) length(unique(x)))
##    V1   V2   V3   V4   V5   V6   V7   V8   V9  V10  V11  V12  V13
##     2   89    3    2    2    2    2    2    2    2    2    2    2
##   V14  V15  V16  V17  V18  V19  V20  V21  V22  V23  V24  V25  V26
##     2    2  202    2   67    2  245    2  151    2  244    2   44
[/code]

A visual take on the missing values in this data set might be helpful:

We replaced missing values in the continuous variables with the variable's mean. The binary variable “Sex” has only a few missing values, and replacing them with the variable’s median or mean would introduce bias, so we deleted these 73 records (training: 44, test: 29).
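A minimal sketch of this cleaning step, using a hypothetical helper `clean_missing()` and made-up toy data rather than the real Hypothyroid columns. Reusing the training means on the test set is an assumption; the post does not say which means were used for the test data.

```r
# Hypothetical helper (not from the original analysis): drop rows where the
# binary column is missing, then fill NAs in numeric columns with column means.
# Pass `means` from the training data when cleaning the test data.
clean_missing <- function(df, binary_col, means = NULL) {
  df <- df[!is.na(df[[binary_col]]), , drop = FALSE]
  num_cols <- setdiff(names(df)[vapply(df, is.numeric, logical(1))], binary_col)
  if (is.null(means)) {
    means <- vapply(df[num_cols], mean, numeric(1), na.rm = TRUE)
  }
  for (col in num_cols) {
    df[[col]][is.na(df[[col]])] <- means[[col]]
  }
  list(data = df, means = means)
}

# Toy data with made-up values
train <- data.frame(sex = c("F", "M", NA, "F"),
                    age = c(30, NA, 50, 70),
                    tsh = c(1.2, 0.8, NA, NA))
test  <- data.frame(sex = c("M", NA), age = c(NA, 40), tsh = c(2.0, NA))

train_clean <- clean_missing(train, "sex")
test_clean  <- clean_missing(test, "sex", means = train_clean$means)
```

Computing the means after the deletion step means the dropped records do not influence the imputed values.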

The Ionosphere data set contains 34 predictors. We removed the first two features: the first takes the same value throughout one of the classes, and the second equals 0 for all observations.
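Both conditions can be checked programmatically. The sketch below uses two hypothetical helpers on a small made-up data frame: `constant_cols()` flags features with a single value overall (like the second Ionosphere feature), and `constant_within_class()` flags features that are constant inside at least one class (like the first).

```r
# Columns that take only one distinct value carry no information
constant_cols <- function(df) {
  names(df)[vapply(df, function(x) length(unique(x)) == 1L, logical(1))]
}

# Columns that are constant within at least one class of `y`
constant_within_class <- function(df, y) {
  names(df)[vapply(df, function(x) {
    any(tapply(x, y, function(v) length(unique(v)) == 1L))
  }, logical(1))]
}

# Made-up example data
toy <- data.frame(f1 = c(1, 1, 1, 0, 1),
                  f2 = c(0, 0, 0, 0, 0),
                  f3 = c(0.3, -0.2, 0.9, 0.1, -0.5))
cls <- c("g", "g", "g", "b", "b")

constant_cols(toy)               # "f2"
constant_within_class(toy, cls)  # "f1" "f2"

# Drop the flagged columns
toy_clean <- toy[setdiff(names(toy), constant_within_class(toy, cls))]
```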

Parameter Tuning Results by Datasets

I tuned the parameters using 70/30 splits of the training data and compared the models' cross-validation errors. I also used the caret package in R to tune the gradient boosting and random forest models. This section summarizes the tuning procedures and results; based on these results, I chose the parameters for each algorithm.
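The idea behind repeated 70/30 splits can be sketched in base R. `repeated_split_rmse()` is a hypothetical helper: it draws 25 random 70/30 splits (the number of repetitions caret reports in the output in this section), fits a model on the 70% part, and averages the RMSE on the held-out 30%. caret's own resampling may differ in details such as stratification, and the `MASS` Boston data and the two linear models are only illustrative.

```r
library(MASS)  # Boston Housing data, used here only as an example

# Estimate validation RMSE over repeated random train/validation splits
repeated_split_rmse <- function(data, fit_fun, response,
                                reps = 25, p = 0.7, seed = 1) {
  set.seed(seed)
  n <- nrow(data)
  rmse <- numeric(reps)
  for (r in seq_len(reps)) {
    idx <- sample.int(n, size = floor(p * n))   # 70% of rows for training
    train <- data[idx, , drop = FALSE]
    valid <- data[-idx, , drop = FALSE]         # remaining 30% for validation
    model <- fit_fun(train)
    pred <- predict(model, newdata = valid)
    rmse[r] <- sqrt(mean((valid[[response]] - pred)^2))
  }
  c(RMSE = mean(rmse), RMSE_SD = sd(rmse))
}

# Compare two candidate linear models
full  <- repeated_split_rmse(Boston, function(d) lm(medv ~ ., data = d), "medv")
small <- repeated_split_rmse(Boston, function(d) lm(medv ~ rm + lstat, data = d), "medv")
rbind(full, small)
```

Using the same seed for both candidates gives them identical splits, so the comparison is not blurred by different random partitions.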


Regression Problem (Boston Housing)

1. Gradient Boosting

The tuning results show that scaling the data does not affect the gradient boosting model, which is expected because tree splits depend only on the ordering of each predictor's values. The cross-validation error is smallest when tree depth = 5.


Using the caret package, we confirmed that the best combination is shrinkage = 0.01, number of trees = 300, n.minobsinnode = 10, and tree depth = 5. Part of the results are shown below:

[code language="r"]
gbmFit1  # tuned gradient boosting model from caret
## Stochastic Gradient Boosting
##
## 253 samples
##  13 predictor
##
## No pre-processing
## Resampling: Repeated Train/Test Splits Estimated (25 reps, 0.7%)
## Summary of sample sizes: 180, 180, 180, 180, 180, 180, ...
## Resampling results across tuning parameters:
##
##   shrinkage  interaction.depth  n.minobsinnode  n.trees  RMSE         Rsquared      RMSE SD       Rsquared SD
##   0.001      1                  10              15       8.337091357  0.6640256183  0.7941402762  0.09248626694
##   0.001      1                  10              30       8.265578098  0.6722929163  0.7915189328  0.08870257422
##   0.001      1                  10              45       8.195669943  0.6778771101  0.7895308048  0.08435275865
##   ...
##
## RMSE was used to select the optimal model using the smallest value.
## The final values used for the model were n.trees = 300, interaction.depth = 5, shrinkage = 0.01 and n.minobsinnode = 10.
[/code]

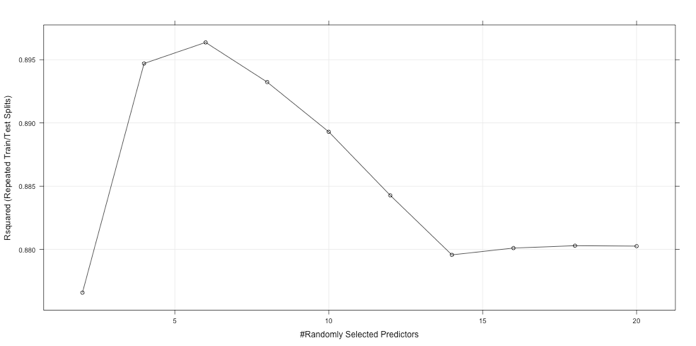
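To make the roles of shrinkage, tree depth, and minimum node size concrete, here is a minimal gradient boosting sketch for squared-error loss built on `rpart` trees. It is not the `gbm` implementation used in the post: it has no row subsampling (so it is plain rather than stochastic boosting), and rpart's `maxdepth` only roughly corresponds to gbm's `interaction.depth`. The `MASS` Boston data is assumed.

```r
library(rpart)  # regression trees used as weak learners
library(MASS)   # Boston Housing data for the example

# Gradient boosting for squared-error loss: each tree is fit to the current
# residuals (the negative gradient) and added with weight `shrinkage`.
boost_fit <- function(x, y, n_trees = 300, shrinkage = 0.01,
                      depth = 5, min_node = 10) {
  f0 <- mean(y)                      # initial constant prediction
  pred <- rep(f0, length(y))
  trees <- vector("list", n_trees)
  ctrl <- rpart.control(maxdepth = depth, minbucket = min_node,
                        cp = 0, xval = 0)
  for (m in seq_len(n_trees)) {
    d <- x
    d$residual <- y - pred
    trees[[m]] <- rpart(residual ~ ., data = d, control = ctrl)
    pred <- pred + shrinkage * predict(trees[[m]], newdata = x)
  }
  list(f0 = f0, trees = trees, shrinkage = shrinkage)
}

# Sum the shrunken contributions of all trees
boost_predict <- function(model, newdata) {
  pred <- rep(model$f0, nrow(newdata))
  for (tree in model$trees) {
    pred <- pred + model$shrinkage * predict(tree, newdata = newdata)
  }
  pred
}

# Example with 300 trees, shrinkage 0.01, depth 5, minimum node size 10
x <- Boston[setdiff(names(Boston), "medv")]
model <- boost_fit(x, Boston$medv)
train_mse <- mean((Boston$medv - boost_predict(model, x))^2)
train_mse
```

Each iteration adds only `shrinkage` times a tree's prediction, which is why a smaller shrinkage needs more trees to reach the same fit.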

2. Random Forest

The tuning results show the cross-validation error and R-squared for different values of mtry, the number of predictors randomly sampled as split candidates at each node. The cross-validation error is smallest when mtry = 6.


The caret results confirm this choice:

[sourcecode language="r"]
rfFit1  # tuned random forest model from caret
## Random Forest
##
## 253 samples
##  13 predictor
##
## No pre-processing
## Resampling: Repeated Train/Test Splits Estimated (25 reps, 0.7%)
## Summary of sample sizes: 180, 180, 180, 180, 180, 180, ...
## Resampling results across tuning parameters:
##
##   mtry  RMSE         Rsquared      RMSE SD       Rsquared SD
##      2  3.290959961  0.8765870076  0.5079010701  0.01964500327
##      4  2.914160845  0.8946978414  0.3797723734  0.02067581818
##      6  2.826270709  0.8963652917  0.3505325664  0.02565374865
##      8  2.828542294  0.8932305921  0.3384923951  0.02829620363
##     10  2.856976760  0.8893013207  0.3504001443  0.02988188614
##     12  2.909261177  0.8842750778  0.3543389148  0.03184843971
##     14  2.964247468  0.8795746376  0.3713993793  0.03205890656
##     16  2.957650138  0.8801113129  0.3717238723  0.03240662814
##     18  2.955660322  0.8802977349  0.3745751188  0.03249307098
##     20  2.955327699  0.8802633254  0.3646805880  0.03206477136
##
## RMSE was used to select the optimal model using the smallest value.
## The final value used for the model was mtry = 6.
[/sourcecode]
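The selection rule in this output (smallest RMSE) can be reproduced from the printed table. The snippet below copies the RMSE column and also measures how far the runner-up is from the winner.

```r
# RMSE values copied from the caret output for mtry = 2, 4, ..., 20
mtry <- seq(2, 20, by = 2)
rmse <- c(3.290959961, 2.914160845, 2.826270709, 2.828542294, 2.856976760,
          2.909261177, 2.964247468, 2.957650138, 2.955660322, 2.955327699)

best <- mtry[which.min(rmse)]       # setting with the smallest RMSE
gap  <- sort(rmse)[2] - min(rmse)   # distance to the second-best setting
best  # 6
```

The gap between mtry = 6 and mtry = 8 is about 0.002, far smaller than the RMSE SD of roughly 0.35 across resamples, so those two settings are practically tied.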

3. Neural Networks

We trained the networks on both the original and the scaled data. The cross-validation error is smaller on the scaled data, so we used the scaled data with a neural network trained by resilient backpropagation and 6 neurons in the hidden layer.
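A rough sketch of the scaled-versus-original comparison. It swaps in the `nnet` package (which ships with R and fits single-hidden-layer networks with BFGS, not resilient backpropagation), uses the `MASS` Boston data, and evaluates a single 70/30 split instead of repeated splits, so the numbers will not match the post.

```r
library(MASS)  # Boston Housing data
library(nnet)  # single-hidden-layer networks, fitted with BFGS

set.seed(42)
predictors <- setdiff(names(Boston), "medv")
idx <- sample.int(nrow(Boston), size = floor(0.7 * nrow(Boston)))

# Standardize with training-set statistics only
centers <- colMeans(Boston[idx, predictors])
scales  <- apply(Boston[idx, predictors], 2, sd)
boston_scaled <- Boston
boston_scaled[predictors] <- scale(Boston[predictors],
                                   center = centers, scale = scales)

# Fit a 6-neuron network on the training rows, return RMSE on the held-out rows
fit_and_score <- function(d, idx) {
  net <- nnet(medv ~ ., data = d[idx, ], size = 6, linout = TRUE,
              decay = 1e-3, maxit = 500, trace = FALSE)
  pred <- predict(net, newdata = d[-idx, ])
  sqrt(mean((d$medv[-idx] - pred)^2))
}

rmse_by_input <- c(original = fit_and_score(Boston, idx),
                   scaled   = fit_and_score(boston_scaled, idx))
rmse_by_input
```

The scaling statistics come from the training rows only, so the held-out rows do not leak into preprocessing.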

4. SVM (linear and kernel)

SVM kernels are computed from distances (or inner products) between observations, so predictors with large ranges dominate; we therefore scale the data set before fitting the SVM models. The tuning results show the cross-validation error for different kernels. Since SVMs with flexible kernels easily overfit the training data, we selected the linear SVM with cost = 3.2.
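Why scaling matters for distance-based kernels can be checked directly. Averaged over all pairs of distinct observations, the squared Euclidean distance equals twice the sum of the column variances, so each predictor's share of that distance is its variance divided by the total. The sketch below (assuming the `MASS` Boston data) computes these shares before and after standardization; an RBF kernel such as exp(-gamma * ||x - x'||^2) is driven by the same distances.

```r
library(MASS)  # Boston Housing data

X <- as.matrix(Boston[setdiff(names(Boston), "medv")])

# Share of the average squared Euclidean distance contributed by each column:
# the mean over pairs of ||x_i - x_j||^2 equals 2 * sum(column variances)
distance_share <- function(M) {
  v <- apply(M, 2, var)
  v / sum(v)
}

raw_share    <- distance_share(X)
scaled_share <- distance_share(scale(X))

round(sort(raw_share, decreasing = TRUE)[1:3], 3)  # a few columns dominate
round(scaled_share, 3)                             # each column gets 1/13
```

On the raw data, `tax` alone accounts for most of the distance, so an unscaled kernel would mostly compare observations by that one column.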

5. Linear ridge regression

For linear ridge regression, the results suggest lambda = 0.01. Because the error can be reduced further by selecting features, we reran the same tuning process with features = [6 11 8 10 13].
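Ridge regression has a closed form, sketched below on standardized predictors with a centered response: beta = (X'X + lambda I)^(-1) X'y. `ridge_fit()` and `ridge_predict()` are hypothetical helpers; `MASS::lm.ridge` and glmnet are packaged alternatives with their own lambda scaling, so lambda values are not directly comparable across tools. Mapping features [6 11 8 10 13] to Boston columns by position in the `MASS` copy of the data is an assumption.

```r
library(MASS)  # Boston Housing data

# Closed-form ridge on standardized X and centered y; intercept = mean(y)
ridge_fit <- function(X, y, lambda) {
  Xs <- scale(X)
  yc <- y - mean(y)
  p  <- ncol(Xs)
  beta <- solve(crossprod(Xs) + lambda * diag(p), crossprod(Xs, yc))
  list(beta = drop(beta), intercept = mean(y),
       center = attr(Xs, "scaled:center"), scale = attr(Xs, "scaled:scale"))
}

# Apply the training-set standardization, then the fitted coefficients
ridge_predict <- function(model, Xnew) {
  Xs <- scale(Xnew, center = model$center, scale = model$scale)
  drop(model$intercept + Xs %*% model$beta)
}

# Assumption: features [6 11 8 10 13] index the Boston columns in their usual order
X_sub <- as.matrix(Boston[, c(6, 11, 8, 10, 13)])
colnames(X_sub)  # "rm" "ptratio" "dis" "tax" "lstat"

fit_small_lambda <- ridge_fit(X_sub, Boston$medv, lambda = 0.01)
fit_large_lambda <- ridge_fit(X_sub, Boston$medv, lambda = 100)
rbind(fit_small_lambda$beta, fit_large_lambda$beta)  # larger lambda shrinks more
```

With lambda = 0 the fit reduces to ordinary least squares on the standardized predictors, which is a convenient sanity check.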

For the 3 classification problems, we followed the same procedures described above. Instead of going over the details, we only show the model selection results for these three problems:

Table 2. Model Selection Results by Data Set

| Model (parameters) | Wdbc | Ionosphere | Hypothyroid |
|---|---|---|---|
| Gradient Boosting (n.trees, depth, n.minobsinnode) | 500, 9, 10 | 450, 9, 15 | 300, 4, 10 |
| Random Forest (mtry) | 6 | 16 | 18 |
| Neural Networks (num. of neurons in the hidden layer) | 10 | 5 | 3 |
| SVM (type, param (degree/sigma), cost) | Linear, 1, 0.32 | Radial, 5, 1 | Linear, 1, 1.32 |
| Ridge regression (lambda) | 0.0001 | 0.0001 | 100000 |
| Logistic regression | No parameter to tune | No parameter to tune | No parameter to tune |
| Model Averaging (logistic regression; AIC of the 3 models) | 3 logistic models (AIC: 31.0; 35.9; 41.3) | 3 logistic models (AIC: 217.2; 231.6; 248.8) | 3 logistic models (AIC: 176.28; 176.64; 177.79) |

Model Averaging

To improve the performance of linear ridge regression and logistic regression, we used the R packages glmulti and MuMIn for model averaging. For each data set, we averaged the best 3 or 5 models to see whether averaging improves on linear ridge regression (for regression) and logistic regression (for classification).
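The post relies on glmulti and MuMIn; the core idea of AIC-based model averaging can be sketched in base R instead. The candidate formulas below are hypothetical (glmulti would search candidates automatically), the data is the binary Boston variant built from `MASS`, and predictions are averaged on the logit scale with Akaike weights. For a full average this matches predicting with the weight-averaged coefficients, with absent terms counted as 0.

```r
library(MASS)  # Boston Housing data, converted to a binary response

boston_bin <- Boston
boston_bin$y <- as.integer(Boston$medv > median(Boston$medv))
boston_bin$medv <- NULL

# Hypothetical candidate models
formulas <- list(
  y ~ rm + lstat,
  y ~ rm + lstat + ptratio,
  y ~ rm + lstat + ptratio + dis
)
fits <- lapply(formulas, glm, family = binomial, data = boston_bin)

# Akaike weights: w_i = exp(-delta_i / 2) / sum_j exp(-delta_j / 2)
aic   <- vapply(fits, AIC, numeric(1))
delta <- aic - min(aic)
w     <- exp(-delta / 2) / sum(exp(-delta / 2))

# Weighted average of the linear predictors, then back to probabilities
eta   <- sapply(fits, predict, newdata = boston_bin, type = "link")
p_avg <- plogis(drop(eta %*% w))
```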

1. Regression Problem (Boston Housing)

We selected the top 5 ridge regression models based on their AICc scores:

[code language="r"]
weightable(avg.model)  # candidate models with their AICc and weights
##   model aicc weights
## 1 y ~ 1 + CRIM + NOX + RM + AGE + DIS + TAX + PTRATIO + B + LSTAT 1327.777772 0.056535233839
## 2 y ~ 1 + CRIM + ZN + NOX + RM + AGE + DIS + RAD + TAX + PTRATIO + B + LSTAT 1328.092560 0.048301873324
## 3 y ~ 1 + CRIM + ZN + NOX + RM + AGE + DIS + TAX + PTRATIO + B + LSTAT 1328.147654 0.046989452154
## 4 y ~ 1 + CRIM + NOX + RM + AGE + DIS + RAD + TAX + PTRATIO + B + LSTAT 1329.091435 0.029313049215
## 5 y ~ 1 + CRIM + NOX + RM + AGE + DIS + TAX + PTRATIO + B 1329.205842 0.027683297814
[/code]
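The `weights` column follows the Akaike-weight formula, w_i proportional to exp(-Δ_i / 2), where Δ_i is a model's AICc minus the smallest AICc. The check below uses the printed values. Because the reported weights appear to be normalized over the full candidate set rather than over these 5 models (they sum to about 0.21), it compares ratios to the top model.

```r
# AICc values and weights copied from the weightable() output
aicc    <- c(1327.777772, 1328.092560, 1328.147654, 1329.091435, 1329.205842)
weights <- c(0.056535233839, 0.048301873324, 0.046989452154,
             0.029313049215, 0.027683297814)

# Relative likelihood of each model versus the best one: exp(-delta / 2)
rel_lik <- exp(-(aicc - min(aicc)) / 2)

# Ratios of the reported weights to the top model should match rel_lik
cbind(rel_lik, weight_ratio = weights / weights[1])

# Weights re-normalized over just these 5 models
round(rel_lik / sum(rel_lik), 3)
```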

Averaging the top 5 models shows that the variables {CRIM, RM, AGE, DIS, TAX, PTRATIO, B} are significant in both the full-averaged and the conditional-averaged model.

2. Classification Problems

For the classification problems, we chose 3 logistic models for each data set based on their AIC scores and averaged them. The results are shown below:

- Wdbc

Table 3.1 Model Averaging Results of Wdbc

| Component | Result |
|---|---|
| Model 1 | y ~ V4 + V8 + V9 + V15 + V22 + V23 + V29 + V30 + V32 |
| Model 2 | y ~ V3 + V4 + V8 + V9 + V15 + V22 + V23 + V29 + V30 + V32 |
| Model 3 | y ~ V3 + V4 + V7 + V8 + V9 + V15 + V22 + V23 + V29 + V30 + V32 |
| Variable importance | V15, V22, V23, V29, V30, V32, V4, V8, V9: 1.00 (in 3 models); V3: 0.31 (in 2 models); V7: 0.08 (in 1 model) |
| Note | None of the model-averaged coefficients is significant, in either the full-averaged or the conditional-averaged model. |

- Ionosphere

Table 3.2 Model Averaging Results of Ionosphere

| Component | Result |
|---|---|
| Model 1 | y ~ V3 + V4 + V5 + V6 + V8 + V9 + V10 + V11 + V13 + V14 + V15 + V16 + V18 + V22 + V23 + V26 + V27 + V30 + V31 |
| Model 2 | y ~ V3 + V4 + V5 + V6 + V8 + V9 + V10 + V11 + V13 + V14 + V15 + V16 + V18 + V22 + V23 + V24 + V26 + V27 + V28 + V30 + V31 |
| Model 3 | y ~ V3 + V4 + V5 + V6 + V8 + V9 + V10 + V11 + V13 + V14 + V15 + V16 + V18 + V22 + V23 + V24 + V26 + V27 + V30 + V31 |
| Variable importance | V10, V11, V13, V14, V15, V16, V18, V22, V23, V26, V27, V3, V30, V31, V4, V5, V6, V8, V9: 1.00 (in 3 models); V24: 0.53 (in 2 models); V28: 0.23 (in 1 model) |
| Note | The variables {V14, V15, V16, V22, V23, V26, V27, V3, V30, V31, V4, V5, V9} are significant in both the full-averaged and the conditional-averaged model. |

- Hypothyroid

Table 3.3 Model Averaging Results of Hypothyroid

| Component | Result |
|---|---|
| Model 1 | y ~ V4 + V6 + V14 + V15 + V16 + V24 |
| Model 2 | y ~ V4 + V6 + V7 + V14 + V15 + V16 + V24 |
| Model 3 | y ~ V4 + V6 + V7 + V14 + V15 + V16 + V17 + V24 |
| Variable importance | Relative variable importance: V14, V15, V16, V24, V4, V6: 1.00 (in 3 models); V7: 0.57 (in 2 models); V17: 0.20 (in 1 model) |
| Note | The variables {V16, V24} are significant in both the full-averaged and the conditional-averaged model. |

Performance by Data Set

The tables and plots below show the estimated performance on the hold-out test data. The best-performing model for each data set is in bold and the worst is in italics.

1. Boston Housing

The table below shows the test MSE for the Boston Housing data. The random forest model has the lowest MSE (32.64723), and ridge regression performs the worst (MSE 303.57214). Ridge regression can be improved with model averaging (MSE 264.95846) and with feature selection (MSE 55.51494).

Table 4. MSE (test) for Boston Housing Data

| Model | MSE (test) | Note |
|---|---|---|
| Gradient Boosting | 33.30788 | |
| Random Forest | **32.64723** | |
| Neural Networks | 87.52996 | Used scaled data set |
| SVM | 97.98975 | Used scaled data set |
| Ridge regression | *303.57214* | Used scaled data set; the test error can be reduced to 55.51494 with feature selection |
| Logistic regression | 0.69170 | Fitted on the binary version of Boston Housing (MEDV above its median) |
| Model Averaging (linear ridge regression) | 264.95846 | |

2. Classification Problems’ GINI (test) by Data Sets

The table below shows the test GINI for the classification problems (lower values are better here). Random forests perform best on both Wdbc and Hypothyroid, while SVM is the best model on Ionosphere.

Overall, gradient boosting has the best average performance. Linear ridge regression performs the poorest, because it is not well suited to classification problems. Logistic regression also has a poor average performance, although it performs very well on Hypothyroid. Consistent with the No Free Lunch theorem, no single learning algorithm is best on every data set.

Model averaging improved on the original logistic regression for Wdbc and Hypothyroid, but not for Ionosphere.

Table 5. Classification Problems’ GINI (test) by Data Set

| Model | Wdbc | Ionosphere | Hypothyroid | Average performance |
|---|---|---|---|---|
| Gradient Boosting | 0.033457249070632 | 0.0610687022900763 | 0.0167548500881834 | 0.0370936004829639 |
| Random Forest | **0.0260223048327138** | 0.0763358778625954 | **0.0149911816578483** | 0.0391164547843858 |
| Neural Networks | 0.0446096654275093 | 0.0687022900763359 | 0.027336860670194 | 0.0468829387246797 |
| SVM | 0.0371747211895911 | **0.0534351145038168** | 0.027336860670194 | 0.039315565454534 |
| Ridge regression | *0.5278810409* | *0.1832061069* | *0.5987654321* | 0.436617526633333 |
| Logistic regression | 0.0594795539 | 0.1297709924 | 0.02557319224 | 0.0716079128466667 |
| Model Averaging (logistic regression) | 0.04832713755 | 0.1374045802 | 0.02469135802 | 0.0701410252566667 |
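The post does not state how the GINI values were computed. Multiplying them by the test-set sizes in Table 1 gives whole numbers, which is the pattern a misclassification rate (misclassified test cases divided by test cases) would produce; the check below shows this for two rows. This is an observation about the reported numbers, not a description of the original code.

```r
# Test-set sizes from Table 1
n_test <- c(Wdbc = 269, Ionosphere = 131, Hypothyroid = 1134)

# Random forest and SVM rows of Table 5
rf  <- c(0.0260223048327138, 0.0763358778625954, 0.0149911816578483)
svm <- c(0.0371747211895911, 0.0534351145038168, 0.027336860670194)

# Implied counts: close to whole numbers (about 7, 10, 17 and 10, 7, 31)
rf * n_test
svm * n_test

# Misclassification rate from true and predicted labels
error_rate <- function(truth, predicted) mean(truth != predicted)
```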

3. ROC plots for Classification Problems

For the 3 classification problems, SVM, random forests, and gradient boosting achieve excellent areas under the ROC curve, while logistic regression and ridge regression perform the poorest. Comparing the plots, the models for Wdbc tend to have larger areas under the ROC curve than those for Ionosphere. This is also reflected in the test GINI values in Table 5.
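The area under the ROC curve can be computed without plotting, using the rank-sum (Mann-Whitney) identity: AUC is the probability that a randomly chosen positive case is scored above a randomly chosen negative one. The sketch below also applies one common definition of the Gini coefficient, 2 * AUC - 1; whether that is the definition behind Table 5 is not stated in the post. The labels and scores are made up.

```r
# AUC via the rank-sum identity; tied scores get average ranks (half a win)
auc <- function(truth, score) {
  pos   <- truth == 1
  n_pos <- sum(pos)
  n_neg <- sum(!pos)
  r <- rank(score)
  (sum(r[pos]) - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)
}

# Gini coefficient under the common definition 2 * AUC - 1
gini <- function(truth, score) 2 * auc(truth, score) - 1

# Made-up labels and classifier scores
truth <- c(1, 1, 0, 1, 0, 0)
score <- c(0.9, 0.8, 0.7, 0.6, 0.3, 0.2)
auc(truth, score)   # 0.8888889
gini(truth, score)  # 0.7777778
```

Here 8 of the 9 positive/negative pairs are ordered correctly (only the positive scored 0.6 loses to the negative scored 0.7), giving AUC = 8/9.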

Conclusion

Given their excellent performance on all 4 data sets, gradient boosting and random forests are the best algorithms overall.

Table 6. Differences Between the Algorithms

| Algorithm | Problem type | Training speed | Prediction speed | Needs scaled data? | Handles many features well? |
|---|---|---|---|---|---|
| Gradient Boosting | Either | Slow | Fast | No | Yes |
| Random Forest | Either | Slow | Moderate | No | Yes |
| Neural Networks | Either | Slow | Moderate | Yes | Yes |
| SVM (with kernel) | Either | Fast | Fast | Yes | Yes |
| Ridge regression | Regression | Fast | Fast | Yes | No (needs feature selection) |
| Logistic regression | Classification | Fast | Fast | No (unless regularized) | No (needs feature selection) |

For regression problems, traditional linear ridge regression can be improved with feature selection and model averaging. Converting the response into a binary label and classifying it with logistic regression, however, is not a good idea. For classification problems, SVM, gradient boosting, and random forests perform very well, while model averaging does not improve logistic regression on every data set (it helped on Wdbc and Hypothyroid but not on Ionosphere).

As Rich Caruana and Alexandru Niculescu-Mizil put it in their great paper, An Empirical Comparison of Supervised Learning Algorithms:

"The best models sometimes perform poorly, and models with poor average performance occasionally perform exceptionally well."